The ATS Portfolio Management Process: Using Raw Trade Logs To Train An Agentic Portfolio Manager

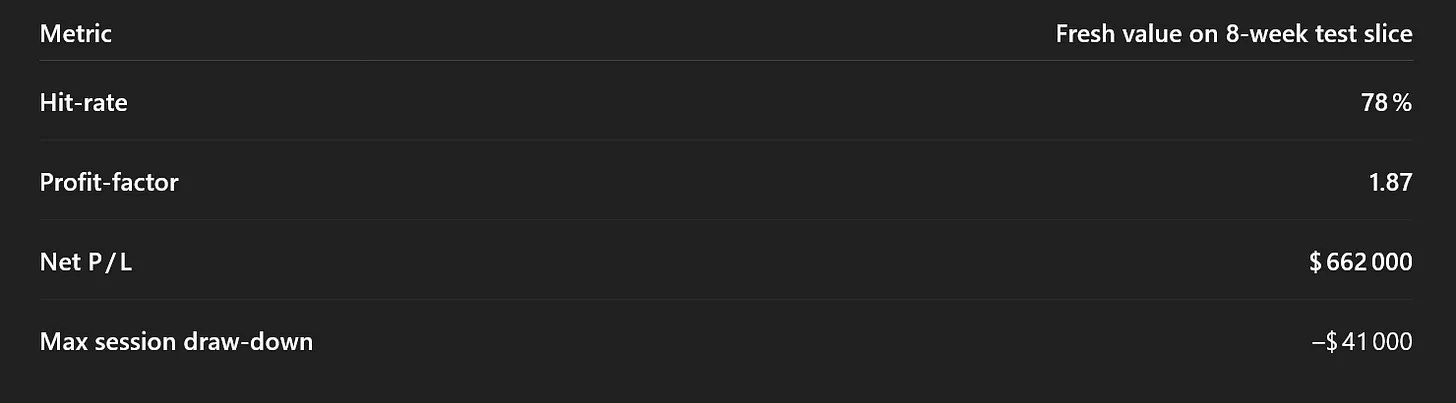

In an 8-week walk-forward test, the model produced a 78% win rate, a 1.87 profit factor, and $662K in net profit, with a maximum single-session drawdown of $41K.

Important: Trading futures is extremely risky. You should only use risk capital to fund live futures accounts, and if you do trade live, be prepared to lose your entire account. There are no guarantees that any performance you see here will continue in the future. I recommend using ATS strategies in simulated trading until you/we find the holy grail of trade strategy. This is strictly for learning purposes.

I’m not just developing strategies—I’m on a quest for the holy grail of automated trading. Questions? Check the FAQs or feel free to reach out directly: AutomatedTradingStrategies@protonmail.com.

I want to take a moment to thank all subscribers. After reviewing subscriber details, I discovered some of you have been with ATS for over three years. Time is precious, and I'm truly honored by your support. I hope to continue providing content that inspires you. Please continue sending me your success stories—they fuel both the discovery process and my desire to forge ahead.

On that note, today is special, particularly for long-time ATS members, some of whom started this journey well ahead of me. We're discussing portfolio management, specifically strategy selection. As I mentioned previously, I believe the holy grail of automated trading isn't a single strategy but a process. This post details the core of that process. While this isn't the only approach, it's highly doable, and the potential payoff makes it worth the effort.

"If you do not know how to ask the right question, you discover nothing."

—W. Edwards Deming, father of the quality movement, who believed deeply in continuous improvement.

The Holy Grail Is A Moving Target

Initially, I searched for the holy grail in a single strategy: one rule set for all instruments and market regimes. About three years ago, I began pursuing a different type of holy grail. I introduced this concept in the first Mudder Report, published on November 2, 2021, which suggested that the holy grail might be more a moving target than a static unicorn.

I know a few of you were with ATS four years ago when I first asked the question, and I'm honored you're still here today. I couldn't have done this without you. This post is our collective reward—it documents the nuts and bolts of the process.

The Process

There are two main steps:

Start a forward test. The first post covered why forward test data matters. The data I've collected from forward testing is priceless. Using ATS strategies will give you a head start, but you can use ANY strategy. Just get the forward test (live market data on simulated accounts) started.

Start looking for patterns. After collecting at least 30 trades (or three months) on any strategy, I began focusing on strategy selection based on patterns in the data. After testing over 100 patterns, nine rose to the top. I'm sharing those nine patterns with you today.
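A minimal sketch of that eligibility rule, assuming "at least 30 trades (or three months)" means either condition is enough and approximating three months as 90 calendar days. The `is_eligible` helper and its constants are hypothetical, not part of any ATS tooling.

```python
from datetime import date

# Hypothetical thresholds taken from the text: 30 trades OR about three months.
MIN_TRADES = 30
MIN_DAYS = 90  # "three months" approximated as 90 calendar days (assumption)


def is_eligible(trade_count: int, test_start: date, today: date) -> bool:
    """Return True once a strategy's forward test has enough history to analyze.

    trade_count: closed trades recorded in the forward test so far.
    test_start: date the strategy's forward test began.
    """
    enough_trades = trade_count >= MIN_TRADES
    enough_time = (today - test_start).days >= MIN_DAYS
    return enough_trades or enough_time


# Usage: 12 trades after one month is not yet enough; 31 trades is.
print(is_eligible(12, date(2025, 1, 2), date(2025, 2, 1)))   # False
print(is_eligible(31, date(2025, 1, 2), date(2025, 1, 20)))  # True
```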

When I first started analyzing trade data, my approach was simple:

"Give me every strategy that made > $1,000 and kept its worst intraday drawdown (Max MAE) under $2,000."

Two filters and one static report that I was very proud of.
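That original two-filter report fits in a few lines. This is a sketch under assumptions: each strategy's forward-test results are summarized as net profit and Max MAE (stored as a positive dollar amount), and the `StrategyStats` class and strategy names are hypothetical.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class StrategyStats:
    name: str
    net_profit: float  # cumulative forward-test net profit, in dollars
    max_mae: float     # worst intraday drawdown (Max MAE), as a positive dollar amount


def static_report(stats: list[StrategyStats],
                  min_profit: float = 1_000.0,
                  max_mae_limit: float = 2_000.0) -> list[str]:
    """Keep strategies that made > min_profit with Max MAE under max_mae_limit."""
    return [s.name for s in stats
            if s.net_profit > min_profit and s.max_mae < max_mae_limit]


stats = [
    StrategyStats("NQ_momentum_5m", 4_250.0, 1_800.0),        # passes both filters
    StrategyStats("GC_breakout_2000t", 900.0, 600.0),         # fails the profit filter
    StrategyStats("YM_meanrev_linebreak", 3_100.0, 2_450.0),  # fails the MAE filter
]
print(static_report(stats))  # ['NQ_momentum_5m']
```

Every question is answered the same way for every strategy, every day, which is exactly what the badge approach later moves beyond.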

But as the weeks and years rolled by, my questions evolved. I knew what I wanted answered but couldn't figure out how to conduct the analysis. Today, with the help of several LLMs and agentic frameworks, I can finally get those questions answered.

Certain questions revealed more than others. I turned these questions/filters into "badges"—each representing a different performance achievement. I'll explain what those are in a moment.

Combined, these filters generated $662,000 in net profit with a 78% hit rate and a 1.87 profit factor in just under two months.

Hit rate 78%: roughly 3.5 winners for every loser.

PF 1.87: each $1 lost was offset by $1.87 in gains.

Max session drawdown -$41K: the worst daily heat was about 6% of net profit (under 3% of gross profit), keeping the equity curve stress-free.
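These headline numbers can be reproduced from a trade list. The sketch below assumes each approved trade is recorded as a `(session_id, pnl)` pair and treats "max session drawdown" as the worst single-session net P&L, which is one reasonable reading of "worst daily heat". It also backs out gross profit and gross loss from the reported net profit and profit factor, which is where the roughly 3.5:1 and roughly 6% figures come from. Function names are illustrative.

```python
from __future__ import annotations

from collections import defaultdict


def summarize(trades: list[tuple[str, float]]) -> dict[str, float]:
    """Win rate, profit factor, net profit and worst session from (session_id, pnl) pairs."""
    wins = [pnl for _, pnl in trades if pnl > 0]
    losses = [pnl for _, pnl in trades if pnl < 0]
    gross_profit = sum(wins)
    gross_loss = -sum(losses)  # stored as a positive number
    session_pnl: dict[str, float] = defaultdict(float)
    for session, pnl in trades:
        session_pnl[session] += pnl
    return {
        "win_rate": len(wins) / len(trades),  # break-even trades count as non-wins
        "profit_factor": gross_profit / gross_loss if gross_loss else float("inf"),
        "net_profit": gross_profit - gross_loss,
        "max_session_drawdown": min(0.0, min(session_pnl.values())),
    }


def implied_gross(net_profit: float, profit_factor: float) -> tuple[float, float]:
    """Back out (gross_profit, gross_loss) from net = GP - GL and PF = GP / GL."""
    gross_loss = net_profit / (profit_factor - 1)
    return profit_factor * gross_loss, gross_loss


toy = [("d1", 500.0), ("d1", -200.0), ("d2", 300.0), ("d2", -700.0), ("d3", 400.0)]
print(summarize(toy))

gp, gl = implied_gross(662_000, 1.87)
print(round(gp), round(gl))        # 1422920 760920
print(round(0.78 / 0.22, 2))       # 3.55 winners per loser
print(round(41_000 / 662_000, 3))  # 0.062 of net profit
print(round(41_000 / gp, 3))       # 0.029 of gross profit
```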

Rejecting 80% of trades still leaves enough volume (typically 4-8 strategies per session) to deliver a 78% win rate and a 1.87 profit factor while scaling net profit to $662K. Even when I feed the model every trade from January onward (nearly 8,000 trades in the forward window), the 78% win rate and profit factor hold. The system isn't cherry-picked; it scales.

The Scale of What We're Dealing With

Imagine if your trading system could observe itself, learn what's working, and automatically adjust position sizes based on real performance. Not through complex AI or black-box algorithms, but through intelligent filters that answer simple questions about market behavior.

That's exactly what I built. But here's the key: This isn't a trading strategy—it's a strategy selection and position sizing system. Think of it as a smart filter that sits on top of enabled strategies, deciding which ones to trade today and how much capital to allocate to each.

I'm not running just a handful of strategies. My system monitors over 200 different strategy variations across multiple markets and timeframes:

Different instruments (NQ, GC, YM, ZB, etc.)

Different bar types and timeframes (5-minute, 33 Heikin-Ashi, 42-minute line break, 2000-tick, dollar volume, point and figure, line break, price on volume)

Different strategy types (momentum, mean reversion, breakout)

Different parameter sets for each

Every day, the selection model evaluates all 200+ strategies (I add 10 strategy variations weekly) and answers one critical question: "Which ones deserve my capital today?"

On any given day, the system might select 5-15 strategies out of 200+ based on which ones show the strongest performance characteristics. This is what the current alert system is based on. If you haven't already, please send in your strategy requests (2 per subscription).

Picture it as the Trading Strategy Olympics: 200+ athletes competing daily, but only medal winners get to represent your portfolio. The more medals earned, the more capital allocated.
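One way to turn "more medals, more capital" into numbers is a capped linear schedule. This is a hypothetical mapping, not the ATS sizing rule: strategies below a minimum badge count get nothing, and each additional badge adds one contract up to a cap. `min_badges`, `max_contracts`, and the strategy names are illustrative.

```python
from __future__ import annotations


def position_size(badge_count: int, min_badges: int = 2, max_contracts: int = 5) -> int:
    """Map a badge count to contracts: 0 below min_badges, then +1 per badge, capped."""
    if badge_count < min_badges:
        return 0
    return min(badge_count - min_badges + 1, max_contracts)


def allocate(badges: dict[str, int], min_badges: int = 2,
             max_contracts: int = 5) -> dict[str, int]:
    """Return contracts per strategy, omitting strategies that earn no allocation."""
    sizes = {name: position_size(n, min_badges, max_contracts) for name, n in badges.items()}
    return {name: size for name, size in sizes.items() if size > 0}


# Hypothetical strategy names and badge counts.
print(allocate({"NQ_momentum_5m": 6, "GC_breakout_2000t": 3, "ZB_meanrev_pnf": 1}))
# {'NQ_momentum_5m': 5, 'GC_breakout_2000t': 2}
```

The cap keeps a single strategy with many badges from dominating the day's risk; any other monotonic schedule would express the same idea.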

Does the system work?

Yes, but it's not foolproof. There are times I've followed the system and lost, but with a 78% win rate, the probabilities favor us. The system self-improves, updating its weights weekly on a rolling basis. It currently produces one end-of-day (EOD) report, but I'm hoping to make the output real-time in Q3, which should improve win rates and, hopefully, uncover additional "badge-worthy" filters.
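The post doesn't spell out how the weekly weights are computed, so the following is only a hypothetical illustration of a rolling update: each badge's weight is re-estimated as the hit rate of trades taken by strategies holding that badge over a trailing window. The window length, record layout, and badge names are all assumptions.

```python
from __future__ import annotations

from collections import defaultdict
from datetime import date, timedelta


def rolling_badge_weights(records, as_of: date, window_weeks: int = 8) -> dict[str, float]:
    """Re-estimate each badge's weight as its hit rate over a trailing window.

    records: iterable of (trade_date, badges_held, pnl), where badges_held is the
    set of badge names the strategy held when the trade was taken.
    Only trades dated in [as_of - window_weeks, as_of) count.
    """
    start = as_of - timedelta(weeks=window_weeks)
    wins: dict[str, int] = defaultdict(int)
    totals: dict[str, int] = defaultdict(int)
    for trade_date, badges_held, pnl in records:
        if not (start <= trade_date < as_of):
            continue  # outside the trailing window
        for badge in badges_held:
            totals[badge] += 1
            wins[badge] += 1 if pnl > 0 else 0
    return {badge: wins[badge] / totals[badge] for badge in totals}


# Hypothetical badge names; intended to be run once a week.
records = [
    (date(2025, 6, 2), {"hot_streak", "low_mae"}, 250.0),
    (date(2025, 6, 3), {"hot_streak"}, -100.0),
    (date(2025, 3, 3), {"low_mae"}, -500.0),  # older than 8 weeks: ignored
]
print(rolling_badge_weights(records, as_of=date(2025, 6, 9)))
```

Because old trades fall out of the window, a badge that stops predicting winners loses weight automatically.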

Casting A Wider Net

I trained on trade data from all forward tests from January to May 15. I then forward tested the final model on the remaining two months (May 16 to July 11, 2025), and the selection system maintained its 78% hit rate.
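The train/test split described above can be sketched as a simple date partition. The window boundaries come from the text; the January start date (Jan 1, 2025) and the record layout `(trade_date, strategy, pnl)` are assumptions.

```python
from __future__ import annotations

from datetime import date

TRAIN_START = date(2025, 1, 1)   # assumption: "January" read as Jan 1, 2025
TRAIN_END = date(2025, 5, 15)    # from the text
TEST_START = date(2025, 5, 16)   # from the text
TEST_END = date(2025, 7, 11)     # from the text


def split_walk_forward(trades: list[tuple]) -> tuple[list[tuple], list[tuple]]:
    """Split records whose first field is the trade date into train and out-of-sample sets."""
    train = [t for t in trades if TRAIN_START <= t[0] <= TRAIN_END]
    test = [t for t in trades if TEST_START <= t[0] <= TEST_END]
    return train, test


trades = [
    (date(2025, 1, 6), "NQ_momentum_5m", 300.0),
    (date(2025, 5, 15), "GC_breakout_2000t", -120.0),
    (date(2025, 5, 16), "YM_meanrev_linebreak", 80.0),
    (date(2025, 7, 14), "ZB_meanrev_pnf", 50.0),  # after the test window: excluded
]
train, test = split_walk_forward(trades)
print(len(train), len(test))                # 2 1
print((TEST_END - TEST_START).days // 7)    # 8 (weeks in the out-of-sample window)
```

The idea is that badge thresholds and weights are fitted on `train` only, and the frozen model is then scored on `test`, so nothing from the May 16 to July 11 window informs the fit.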

You might wonder: "How many trades actually made it into the 78% win-rate basket?"

The system rejects roughly 70% of candidate trades each night, leaving 4-8 "cream-of-the-crop" strategies to carry capital. This keeps daily management simple while diversifying across momentum, scalpers, and calendar quirks.

This aggressive filtering is the secret sauce, and we can afford it because the portfolio generates a high trade count. The more strategies you trade, the more selective you can be, ensuring only the highest-quality setups get capital. Those filtered trades produced a 1.87 profit factor, $662K in net profit, and a max session drawdown of $41K.
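The nightly filtering step can be sketched as threshold-then-rank. This version assumes each candidate has already been scored by its badge count and counts strategies rather than individual trades, a simplification. The three-badge threshold and eight-pick cap are illustrative, chosen so the toy example rejects 70% of candidates, as the text describes.

```python
from __future__ import annotations


def select_tonight(candidates: dict[str, int], min_badges: int = 3,
                   max_picks: int = 8) -> tuple[list[str], float]:
    """Choose the next session's strategies from tonight's candidates.

    candidates maps strategy name -> badge count. Candidates with fewer than
    min_badges are rejected; survivors are ranked by badge count (name breaks
    ties) and capped at max_picks. Returns (picks, rejection_rate).
    """
    survivors = [name for name, n in candidates.items() if n >= min_badges]
    ranked = sorted(survivors, key=lambda name: (-candidates[name], name))
    picks = ranked[:max_picks]
    rejection_rate = 1 - len(picks) / len(candidates) if candidates else 0.0
    return picks, rejection_rate


# Ten hypothetical candidates; only three clear the bar.
tonight = {"A": 5, "B": 1, "C": 3, "D": 0, "E": 4, "F": 2, "G": 2, "H": 1, "I": 0, "J": 2}
picks, rejected = select_tonight(tonight)
print(picks, round(rejected, 2))  # ['A', 'E', 'C'] 0.7
```

With 200+ candidates instead of ten, the same threshold can stay strict and still leave a full slate of picks, which is the point about trade count above.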

"In the old economy, it was all about having the answers. But in today's dynamic, lean economy, it's more about asking the right questions."

— Warren Berger, journalist and innovation expert who has studied how breakthrough ideas begin with better questions. Author of "A More Beautiful Question" and "The Book of Beautiful Questions," Berger has analyzed how designers, entrepreneurs, and creative thinkers use inquiry to solve problems and spark innovation. His work has appeared in Fast Company, Harvard Business Review, and Wired.

The Power of Asking the Right Questions

If there's anything I've learned from working with 3-5 LLMs daily, it's that my only real constraint is knowing what questions to ask. This strategy selection system uses nine filters, all derived from the most fruitful questions. These nine questions were discovered using reinforcement learning.

Each filter is a "badge" awarded to a strategy for achieving the desired performance. I have the system set to recommend new filters and strategies weekly, so there's always room for improvement, but this is where we are today.
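Treating each badge as a named yes/no question over a strategy's recent stats keeps the list easy to extend when the system proposes new filters. The badge names and thresholds below are placeholders, not the nine ATS filters (two of them simply reuse the original $1,000 / $2,000 rule), and the `Stats` fields are an assumed summary format.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Callable


@dataclass
class Stats:
    trades: int
    win_rate: float       # fraction of winning trades, 0..1
    profit_factor: float
    net_profit: float     # dollars
    max_mae: float        # worst intraday drawdown, positive dollars


# Placeholder badges, NOT the nine ATS filters: each is a named yes/no question.
BADGES: dict[str, Callable[[Stats], bool]] = {
    "profitable": lambda s: s.net_profit > 1_000,
    "contained_heat": lambda s: s.max_mae < 2_000,
    "consistent": lambda s: s.trades >= 30 and s.win_rate >= 0.6,
    "efficient": lambda s: s.profit_factor >= 1.5,
}


def award_badges(stats: Stats) -> set[str]:
    """Return the names of every badge the strategy's stats earn."""
    return {name for name, question in BADGES.items() if question(stats)}


earned = award_badges(Stats(trades=42, win_rate=0.71, profit_factor=1.6,
                            net_profit=3_400.0, max_mae=2_300.0))
print(sorted(earned), len(earned))  # ['consistent', 'efficient', 'profitable'] 3
```

Adding a new question is one more dictionary entry, and the badge count per strategy falls out as `len(award_badges(...))`.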

What are the filters?

I asked dozens of questions. Some were recommended by LLMs, some by students, some by subscribers. In the end, these nine made the final team. These are the questions that cut through market noise to identify which strategies are worth betting on: