Automated Trading Strategy #92: Using The Q-learning Algorithm For Analysis

Machine Learning Strategy #1

There is no guarantee that ATS strategies will have the same performance in the future. I use backtests and forward tests to compare historical strategy performance. Backtests are based on historical data, not real-time data, so the results shared are hypothetical, not real. Forward tests are based on live data; however, they use a simulated account. Any success I have with live trading is atypical. Trading futures is extremely risky. You should only use risk capital to fund live futures accounts, and if you do trade live, be prepared to lose your entire account. There are no guarantees that any performance you see here will continue in the future. I recommend using ATS strategies in simulated trading until you/we find the holy grail of trade strategy. This is strictly for learning purposes.

My hope was to get this post out to you on March 5; however, I ran into an issue with the MAE / MFE calculations in NT8. If you’re using NT8, the built-in MAE and MFE values are not being calculated properly. I’ve contacted NinjaTrader about this and they are aware of the issue, but there is no set date for when it will be resolved. I recommend creating your own calculations for these metrics until then. Strategy 92 uses its own custom calculation for tracking Maximum Favorable Excursion (MFE) and Maximum Adverse Excursion (MAE), rather than relying on NinjaTrader 8's built-in functionality.
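For anyone rolling their own numbers, here is a minimal Python sketch of one common way to define MFE and MAE for a single trade. It is not the calculation used in Strategy 92 and it is not NinjaScript; the function name `excursions` and its inputs are hypothetical, and it assumes you have already collected the bar highs and lows for the period the trade was open.

```python
def excursions(entry_price, direction, highs, lows):
    """Return (mfe, mae) in price points for one trade.

    direction: +1 for a long trade, -1 for a short trade.
    highs, lows: bar highs and lows observed while the trade was open.
    This is an illustrative definition, not NT8's built-in calculation.
    """
    if direction not in (1, -1):
        raise ValueError("direction must be +1 (long) or -1 (short)")
    if not highs or not lows:
        raise ValueError("need at least one bar of highs and lows")

    if direction == 1:
        favorable = max(highs) - entry_price   # best price above entry
        adverse = entry_price - min(lows)      # worst price below entry
    else:
        favorable = entry_price - min(lows)    # best price below entry
        adverse = max(highs) - entry_price     # worst price above entry

    # Excursions are reported as non-negative distances from the entry.
    return max(favorable, 0.0), max(adverse, 0.0)


# Made-up prices purely for illustration.
highs = [18010.0, 18025.0, 18015.0]
lows = [17990.0, 18005.0, 17995.0]
long_mfe, long_mae = excursions(18000.0, 1, highs, lows)
short_mfe, short_mae = excursions(18000.0, -1, highs, lows)
print(long_mfe, long_mae)    # 25.0 10.0
print(short_mfe, short_mae)  # 10.0 25.0
```

To convert points to dollars, multiply by the contract's point value and the number of contracts.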

As a quick reminder, we’re on the hunt for the holy grail of automated trading strategy. If you have any questions, start with the FAQs and if you still have questions, feel free to reach out to me (Celan) directly at AutomatedTradingStrategies@protonmail.com.

AI Generated Strategies

A few years ago I started using NT8’s AI Generate to create strategies. You can find a list of those strategies at the bottom of the Strategy Description page. Unfortunately, none of those strategies performed well in various forward tests, so today I want to introduce a new AI Generated Portfolio. Instead of being generated through NT8’s AI Generate, these strategies will be generated with machine learning algorithms.

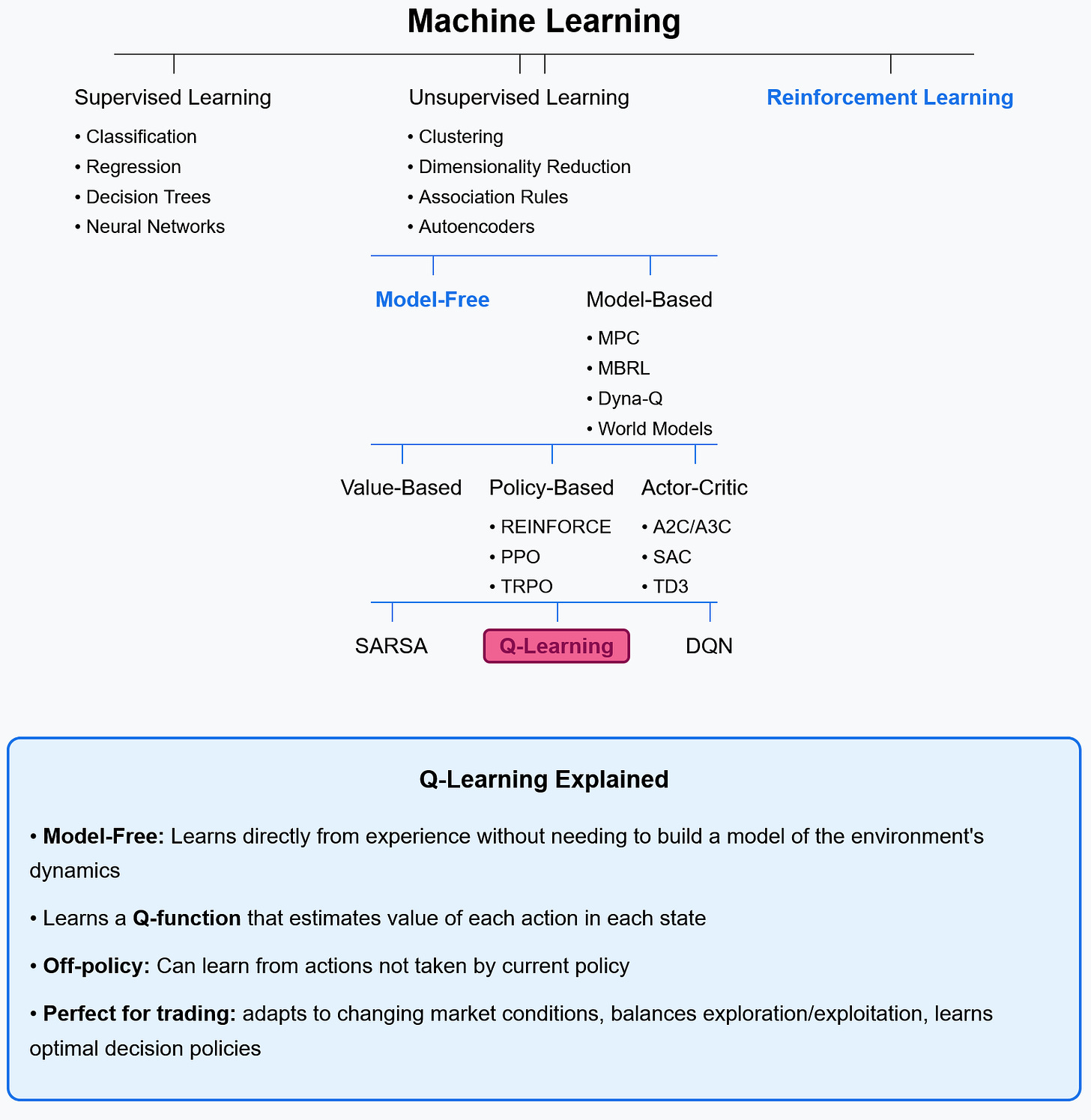

Before I get started, I want to remind everyone that I’m a trader. As a trader, there is a lot I don’t know about machine learning, but I think it’s a critical next step in our hunt. ML gives us a kind of superpower, and with that power comes a deeper awareness of market patterns. As I said in the last post, the challenge is learning how to apply this awareness. The goal of this series is to test the performance of various strategies created with ML. The first strategy is Strategy 92. It was created with a specific reinforcement learning algorithm called Q-learning.

What is Q-Learning?

Artificial Intelligence is the broad field focused on creating systems that can perform tasks that typically require human intelligence. Machine Learning is a subset of AI focused on creating systems that improve through experience rather than explicit programming. Reinforcement Learning is a type of machine learning where agents learn by interacting with an environment and receiving rewards or penalties based on those actions. Q-learning is a specific reinforcement learning algorithm that allows agents to learn optimal behavior through trial and error.

Q-learning is what's called a "model-free" reinforcement learning algorithm—a term that confuses people new to machine learning (like myself). In reinforcement learning, a "model" refers to an understanding of how the environment works—specifically, how states transition to other states when actions are taken. It's essentially a representation of the cause-and-effect relationships in a system.

For trading, a model would mean having equations that predict:

How the market will respond to certain conditions

What the probability distribution of next price movements would be

How different indicators will change based on market actions

So a model-based approach:

First builds an understanding of how the environment (market) works

Learns the transition probabilities between states

Plans ahead by simulating possible future outcomes

Example: "If I enter long here with RSI at 30, there's a 65% chance the price will rise 2% within the next 10 bars."
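To make "learns the transition probabilities between states" concrete, here is a small Python sketch that estimates them by counting. The state labels (`rsi_low`, `rsi_high`), the outcome labels, and the observations are all made up for illustration; a real model-based system would need far richer states and far more data.

```python
from collections import Counter, defaultdict

# Hypothetical observations: (state, action, next_state).
# These are invented labels, not real market data.
observations = [
    ("rsi_low", "long", "price_up"),
    ("rsi_low", "long", "price_up"),
    ("rsi_low", "long", "price_down"),
    ("rsi_low", "long", "price_up"),
    ("rsi_high", "long", "price_down"),
    ("rsi_high", "long", "price_up"),
]


def estimate_transition_model(samples):
    """Estimate P(next_state | state, action) by counting observed transitions."""
    counts = defaultdict(Counter)
    for state, action, next_state in samples:
        counts[(state, action)][next_state] += 1

    model = {}
    for key, outcomes in counts.items():
        total = sum(outcomes.values())
        model[key] = {nxt: n / total for nxt, n in outcomes.items()}
    return model


model = estimate_transition_model(observations)
print(model[("rsi_low", "long")])  # {'price_up': 0.75, 'price_down': 0.25}
```

A model-based agent would then plan with these probabilities, for example by simulating what happens after each possible action.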

Trading environments are incredibly complex with countless variables affecting price movement. Building an accurate model of market dynamics is nearly impossible. Q-learning sidesteps these challenges by not trying to model the environment.

Model-Free RL (like Q-learning):

Skips building a model of the environment

Learns optimal actions directly from experience (trial and error)

Doesn't try to predict market movements; focuses on action values instead (action optimization is the trader's edge)

Example: "I don't know how the market will respond, but I've learned that entering long when RSI is at 30 tends to be profitable in the long run"

Here’s how you can think about Q-learning within the world of ML:

Unlike "model-based" approaches that try to predict exactly how markets will respond to certain conditions, Q-learning learns directly, through trial and error, which actions tend to be profitable in which situations. Q-learning doesn't care what the market will do; it only cares what you should do.
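For readers who want to see the mechanics, here is a minimal sketch of tabular Q-learning in Python. It is a toy, not the code behind Strategy 92: the three RSI-bucket states, the two actions, the reward function `toy_reward`, and the settings `ALPHA`, `GAMMA`, and `EPSILON` are all assumptions chosen for illustration. The core of the algorithm is the single update line near the bottom of `train`.

```python
import random

ACTIONS = ["flat", "long"]
STATES = ["rsi_low", "rsi_mid", "rsi_high"]  # hypothetical coarse RSI buckets

ALPHA = 0.1    # learning rate (assumed)
GAMMA = 0.9    # discount factor for future rewards (assumed)
EPSILON = 0.1  # probability of trying a random action (assumed)


def toy_reward(state, action, rng):
    """Made-up reward: going long pays on average when RSI is low, loses when high."""
    if action == "flat":
        return 0.0
    edge = {"rsi_low": 1.0, "rsi_mid": 0.0, "rsi_high": -1.0}[state]
    return edge + rng.gauss(0.0, 0.5)


def train(steps=20000, seed=0):
    rng = random.Random(seed)
    q = {(s, a): 0.0 for s in STATES for a in ACTIONS}
    state = rng.choice(STATES)
    for _ in range(steps):
        # Epsilon-greedy: mostly take the best-known action, sometimes explore.
        if rng.random() < EPSILON:
            action = rng.choice(ACTIONS)
        else:
            action = max(ACTIONS, key=lambda a: q[(state, a)])

        reward = toy_reward(state, action, rng)
        next_state = rng.choice(STATES)  # toy environment: next state is random

        # Q-learning update: nudge Q(s, a) toward reward + discounted best next value.
        best_next = max(q[(next_state, a)] for a in ACTIONS)
        q[(state, action)] += ALPHA * (reward + GAMMA * best_next - q[(state, action)])
        state = next_state
    return q


q_table = train()
for s in STATES:
    best = max(ACTIONS, key=lambda a: q_table[(s, a)])
    print(s, "->", best)
```

Notice that nothing in the code tries to predict the next state. The agent only keeps a table of how good each action has been in each state, which is what "model-free" means in practice.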

Simulating Real-World Market Conditions

First things first, we need data, and lots of it. Not just any data. We need price action that captures all the nuances of real-world NQ futures trading.

Inspired by Grok3 (trained on synthetic data to refine logic and then evaluated against actual data), I created a model that generated five years of synthetic, intraday NQ futures data. The simulation isn't just random price movement; it incorporates realistic market behaviors like:

Alternating market regimes (bull markets, bear markets, and consolidation periods)

Varying volatility clusters (calm vs. turbulent periods)

Overnight gaps and price jumps

News-driven events and occasional outliers

Intraday volume patterns that mirror actual market microstructure

Mean-reverting tendencies over short timeframes with trending behavior over longer periods
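To give a feel for how a few of these behaviors can be simulated, here is a simplified Python sketch covering regime switching, volatility clustering, and occasional jumps. It is not the generator used for this post, and it leaves out overnight gaps, volume patterns, and mean reversion. Every name and number in it (`REGIMES`, `SWITCH_PROB`, `VOL_PERSISTENCE`, `JUMP_PROB`, the starting price) is an assumption picked for illustration.

```python
import math
import random

# Hypothetical regime parameters: per-bar drift and baseline volatility of log returns.
REGIMES = {
    "bull": {"drift": 0.00005, "vol": 0.0008},
    "bear": {"drift": -0.00005, "vol": 0.0012},
    "consolidation": {"drift": 0.0, "vol": 0.0005},
}
SWITCH_PROB = 0.002     # chance of re-drawing the regime on any bar (assumed)
VOL_PERSISTENCE = 0.95  # how much of the last bar's volatility carries over (assumed)
JUMP_PROB = 0.001       # chance of a news-style jump on any bar (assumed)


def simulate_closes(n_bars, start_price=18000.0, seed=42):
    """Return (closes, regimes): synthetic closing prices and the regime of each bar."""
    rng = random.Random(seed)
    regime = "consolidation"
    vol = REGIMES[regime]["vol"]
    price = start_price
    closes, regimes = [], []

    for _ in range(n_bars):
        # Regime switching: occasionally re-draw the regime (may pick the same one).
        if rng.random() < SWITCH_PROB:
            regime = rng.choice(list(REGIMES))
        params = REGIMES[regime]

        # Volatility clustering: today's vol is mostly yesterday's vol plus a random shock.
        shock = rng.lognormvariate(0.0, 0.5)
        vol = VOL_PERSISTENCE * vol + (1 - VOL_PERSISTENCE) * params["vol"] * shock

        log_return = params["drift"] + vol * rng.gauss(0.0, 1.0)

        # Occasional outlier: add a larger move to mimic a news-driven event.
        if rng.random() < JUMP_PROB:
            log_return += rng.gauss(0.0, 0.005)

        price *= math.exp(log_return)
        closes.append(price)
        regimes.append(regime)

    return closes, regimes


closes, regimes = simulate_closes(1000)
print(len(closes), round(min(closes), 2), round(max(closes), 2))
```

Working in log returns keeps prices positive, and fixing the seed makes a run repeatable so different strategies can be compared on the same synthetic path.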

This approach provided the dataset required to use Q-learning. The next step was to run the analysis.

This is where things get really interesting.

Based on a comprehensive analysis of 5 years of synthetic NQ futures data, here are the 10 strongest signals identified through Q-learning and statistical analysis: